Database Replication

1 Why Replicate?

- Availability: Survive server or region failures; fail over to replicas.

- Read Scalability: Spread read traffic across replicas.

- Latency: Serve users from geographically close replicas.

- Backups/Analytics: Offload heavy queries to secondary nodes.

2 Core Terminology

- Leader (Primary): Accepts writes; propagates changes to replicas.

- Follower (Replica): Applies writes from leader; typically read-only.

- Replication Lag: Delay between the leader committing a write and a follower applying it.
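As a rough illustration of the last term, lag can be expressed either in log entries or in time. The sketch below assumes each node exposes a monotonically increasing log position and the leader-side commit timestamp of its latest entry; `NodeStatus` and `replication_lag` are hypothetical names, not part of any particular database.

```python
from dataclasses import dataclass


@dataclass
class NodeStatus:
    """Hypothetical snapshot of a node's replication state."""
    log_position: int        # last entry committed (leader) or applied (follower)
    last_commit_time: float  # leader-side commit timestamp of that entry, in seconds


def replication_lag(leader: NodeStatus, follower: NodeStatus) -> tuple[int, float]:
    """Return lag as (entries behind, seconds behind); never negative."""
    entries_behind = max(0, leader.log_position - follower.log_position)
    seconds_behind = max(0.0, leader.last_commit_time - follower.last_commit_time)
    return entries_behind, seconds_behind


leader = NodeStatus(log_position=1_050, last_commit_time=100.0)
follower = NodeStatus(log_position=1_020, last_commit_time=97.5)
entries, seconds = replication_lag(leader, follower)
print(f"follower is {entries} entries / {seconds:.1f}s behind")
```

Real systems usually report one or both of these figures through their own status views; the arithmetic is the same.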

3 Replication Strategies

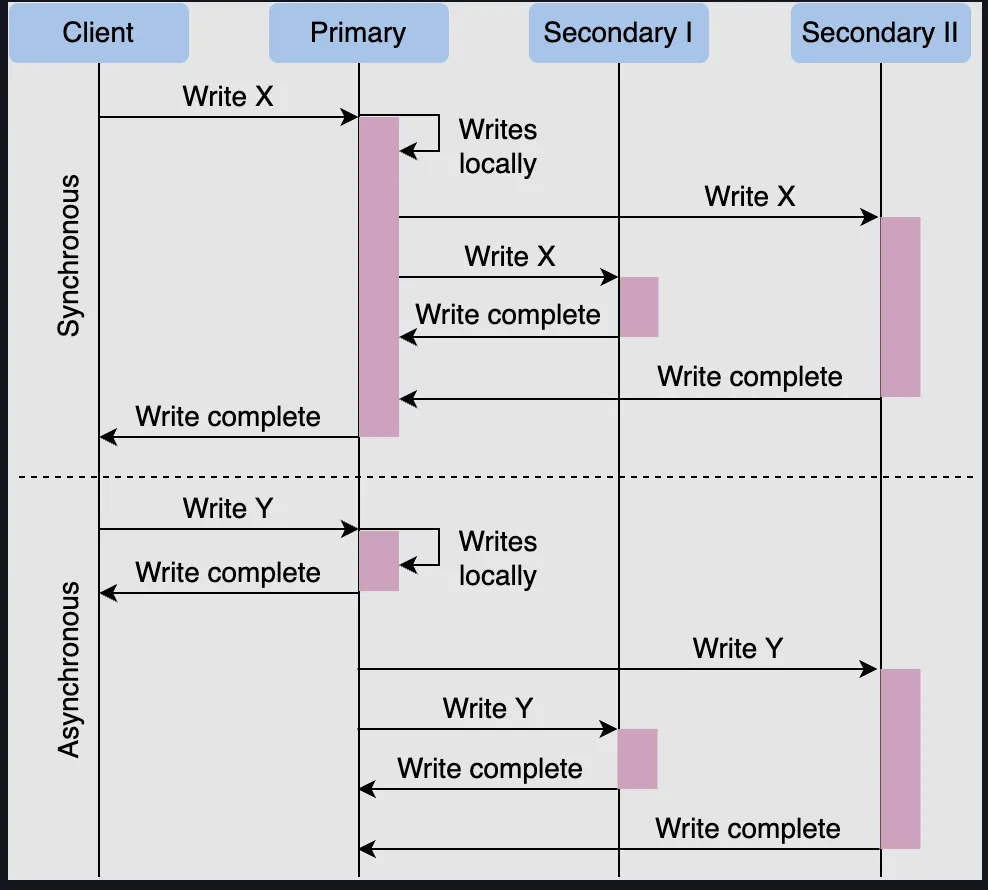

| Strategy | Commit Timing | Pros | Cons |
|---|---|---|---|
| Synchronous | Wait for replicas to acknowledge before commit | Strong consistency | Higher latency; if replicas fail, writes block |
| Asynchronous | Leader commits immediately, followers update later | Low write latency | Risk of data loss if leader fails before replicas apply changes |
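The difference in commit timing can be sketched with a toy in-memory model. This is only an illustration under simplifying assumptions: `Replica` and `Leader` are hypothetical classes, replication is a direct method call rather than a network hop, and a real synchronous system would also have to handle entries that reached only some of the replicas.

```python
class Replica:
    def __init__(self, name, reachable=True):
        self.name = name
        self.reachable = reachable
        self.log = []

    def apply(self, entry):
        """Apply an entry and acknowledge; fail if the replica is unreachable."""
        if not self.reachable:
            raise ConnectionError(f"{self.name} unreachable")
        self.log.append(entry)


class Leader:
    def __init__(self, replicas, synchronous):
        self.replicas = replicas
        self.synchronous = synchronous
        self.log = []
        self.pending = []  # entries not yet shipped (async mode only)

    def write(self, entry):
        if self.synchronous:
            # Every replica must acknowledge before the write counts as committed.
            for replica in self.replicas:
                replica.apply(entry)  # raises if a replica is down, so no commit
            self.log.append(entry)
        else:
            # Commit locally first; replicas catch up later.
            self.log.append(entry)
            self.pending.append(entry)

    def ship_pending(self):
        """Background step in async mode: push queued entries to replicas."""
        for entry in self.pending:
            for replica in self.replicas:
                replica.apply(entry)
        self.pending.clear()


# Asynchronous: the leader has the entry, the replica does not yet.
async_replica = Replica("r1")
async_leader = Leader([async_replica], synchronous=False)
async_leader.write("x=1")
print(async_leader.log, async_replica.log)

# Synchronous: an unreachable replica blocks the write.
sync_leader = Leader([Replica("r2", reachable=False)], synchronous=True)
try:
    sync_leader.write("y=2")
except ConnectionError as err:
    print("write blocked:", err)
```

If the asynchronous leader crashed before `ship_pending()` ran, `x=1` would exist nowhere else, which is the data-loss risk named in the table.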

4 Scope of Replication

- Full replication: Every node stores the complete dataset. High availability; higher storage costs.

- Partial replication: Each replica stores a subset. Saves space; queries may need multiple replicas.
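A minimal sketch of partial replication, assuming keys are placed by hashing onto a fixed list of nodes with a replication factor of 2. The node names and the `replicas_for` and `nodes_for_query` helpers are hypothetical; production systems typically use consistent hashing or range-based placement rather than this simple modulo scheme.

```python
import hashlib

NODES = ["node-a", "node-b", "node-c", "node-d"]  # hypothetical node names
REPLICATION_FACTOR = 2  # each key lives on 2 of the 4 nodes


def replicas_for(key: str, nodes=NODES, rf=REPLICATION_FACTOR) -> list[str]:
    """Pick `rf` consecutive nodes, starting at a position derived from the key's hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    start = int.from_bytes(digest[:8], "big") % len(nodes)
    return [nodes[(start + i) % len(nodes)] for i in range(rf)]


def nodes_for_query(keys: list[str]) -> set[str]:
    """A multi-key query may need several nodes (here: the first replica of each key)."""
    return {replicas_for(k)[0] for k in keys}


print(replicas_for("user:1"))
print(nodes_for_query(["user:1", "user:2", "order:17"]))
```

With full replication the second function would always return a single node; with partial replication its result grows with the spread of the keys being queried.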

5 Architectures

Single-Leader (Master-Slave / Active-Passive)

- Writes go to the leader; followers replicate either synchronously or asynchronously.

- Promote a follower on leader failure.

- Good for read-heavy workloads.

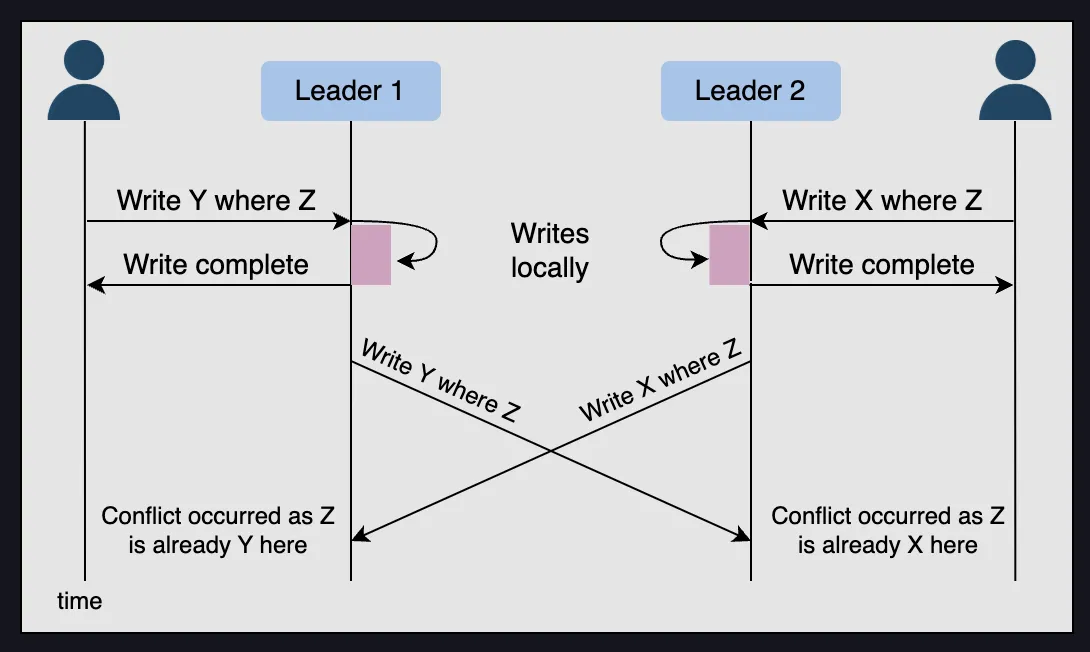
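The routing and failover behavior described for single-leader setups might be sketched as follows. `Node` and `Cluster` are hypothetical classes, and promoting the follower with the highest applied log position is one common heuristic, assumed here for illustration; real failover also needs failure detection and fencing of the old leader.

```python
import itertools


class Node:
    def __init__(self, name):
        self.name = name
        self.applied_position = 0  # last replicated log position applied
        self.healthy = True


class Cluster:
    def __init__(self, leader, followers):
        self.leader = leader
        self.followers = list(followers)
        self._round_robin = itertools.cycle(self.followers)

    def route(self, is_write: bool) -> Node:
        """Writes always go to the leader; reads rotate across followers."""
        if is_write or not self.followers:
            return self.leader
        return next(self._round_robin)

    def fail_over(self) -> Node:
        """Promote the healthy follower that has applied the most of the log."""
        candidates = [f for f in self.followers if f.healthy]
        if not candidates:
            raise RuntimeError("no healthy follower to promote")
        new_leader = max(candidates, key=lambda f: f.applied_position)
        self.followers.remove(new_leader)
        self.leader = new_leader
        self._round_robin = itertools.cycle(self.followers)
        return new_leader


a, b, c = Node("a"), Node("b"), Node("c")
b.applied_position, c.applied_position = 90, 97
cluster = Cluster(leader=a, followers=[b, c])
print(cluster.route(is_write=True).name)   # a
print(cluster.route(is_write=False).name)  # b
```

Calling `cluster.fail_over()` on this cluster would promote `c`, because it has applied more of the log than `b` and therefore loses the least data.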

Multi-Leader (Master-Master / Active-Active)

- Multiple nodes accept writes; replicate changes among leaders.

- Useful for multi-region write workloads.

- Requires conflict detection and resolution.

Conflict Example
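One way such a conflict can arise: two leaders in different regions accept updates to the same key at nearly the same time, and each learns of the other's write only after committing its own. The sketch below resolves it with last-write-wins (LWW), which is just one possible policy and is assumed here for illustration; the `Write` type, the key, and the values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Write:
    key: str
    value: str
    timestamp: float  # wall-clock time at the accepting leader (clocks may be skewed)
    leader_id: str    # used only to break timestamp ties deterministically


def resolve_lww(a: Write, b: Write) -> Write:
    """Last-write-wins: keep the write with the larger (timestamp, leader_id)."""
    return max(a, b, key=lambda w: (w.timestamp, w.leader_id))


# Two users edit the same row at nearly the same time in different regions.
write_us = Write("user:42:email", "alice@example.com", timestamp=1000.120, leader_id="us-east")
write_eu = Write("user:42:email", "alice@example.org", timestamp=1000.135, leader_id="eu-west")

winner = resolve_lww(write_us, write_eu)
print(winner.value)  # alice@example.org; the us-east update is silently dropped
```

Because the comparison is deterministic, both leaders converge on the same value regardless of the order in which they see the two writes. The cost is that one update is discarded without the user being told, which is why some applications prefer merging or surfacing the conflict instead.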


Leaderless (No Single Primary)

- Clients write to multiple replicas directly.

- Dynamo-style systems such as Cassandra and Riak rely on quorum reads/writes.

- Highly available, but conflict resolution and consistency tuning are complex.
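Quorum reads and writes can be sketched with the usual condition that a read quorum of size r and a write quorum of size w over n replicas overlap when r + w > n. The `LeaderlessStore` class below is a hypothetical in-memory model; it assumes the caller supplies a version number, whereas real systems derive versions from timestamps or vector clocks.

```python
def quorums_overlap(n: int, w: int, r: int) -> bool:
    """Every read quorum intersects every write quorum when r + w > n."""
    return r + w > n


class LeaderlessStore:
    def __init__(self, n: int, w: int, r: int):
        self.n, self.w, self.r = n, w, r
        # Each replica maps key -> (version, value).
        self.replicas = [dict() for _ in range(n)]

    def write(self, key, value, version, reachable):
        """Write to reachable replicas; succeed only if at least w can acknowledge."""
        if len(reachable) < self.w:
            raise RuntimeError("write quorum not met")
        for i in reachable:
            self.replicas[i][key] = (version, value)

    def read(self, key, reachable):
        """Read from r reachable replicas and return the highest-versioned value."""
        if len(reachable) < self.r:
            raise RuntimeError("read quorum not met")
        answers = [self.replicas[i].get(key, (0, None)) for i in list(reachable)[: self.r]]
        return max(answers, key=lambda a: a[0])[1]


store = LeaderlessStore(n=3, w=2, r=2)
store.write("cart", ["book"], version=1, reachable=[0, 1, 2])
store.write("cart", ["book", "pen"], version=2, reachable=[0, 1])  # replica 2 missed this
print(store.read("cart", reachable=[1, 2]))  # ['book', 'pen']
```

The read still returns the newer value even though replica 2 is stale, because with n=3, w=2, r=2 at least one replica in any read quorum saw the latest write. Lowering r or w trades that guarantee for latency and availability.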

6 Trade-offs

- Consistency vs. Availability: The CAP theorem applies: during a network partition, a system must give up either consistency or availability. Asynchronous replication favors availability, so replicas are only eventually consistent.

- Operational Overhead: Monitor lag, handle failovers, keep configs in sync.

- Write Conflicts: Multi-leader setups need robust reconciliation policies.

7 Best Practices

- Monitor replication lag and alert on thresholds.

- Automate failover; run regular drills.

- Place replicas close to users, but account for legal and compliance requirements.

- Document conflict resolution rules for multi-leader deployments.

- Replication boosts resilience and read performance, but you must manage lag and conflicts.

- Single-leader fits read-heavy apps; multi-leader fits geo-distributed writes; leaderless offers high availability with eventual consistency.

- Pair replication with distributed transaction handling when writes span replicas or services.